AI-Powered Brain Decoder Translates Thoughts Into Text

Scientists Enhance AI Technology to Help People With Speech Disorders Communicate

Scientists have improved an existing AI-powered brain decoder so that it can be adapted to a new person's brain and translate that person's thoughts into text, without first collecting many hours of training data from them. This breakthrough could offer new communication tools for people with aphasia, a neurological disorder that affects a person’s ability to speak or understand language, the researchers said.

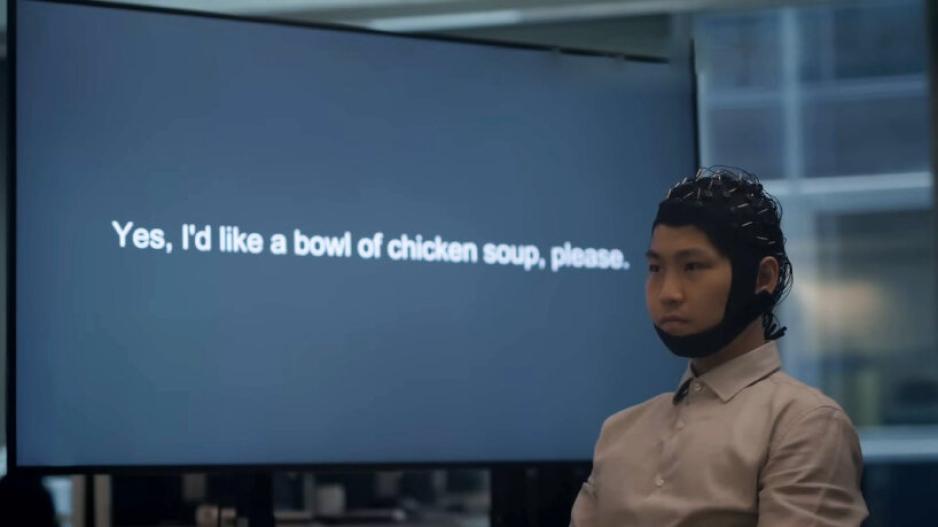

The brain decoder uses machine learning to translate a person’s thoughts into written text based on brain activity patterns.

"Aphasia patients often struggle with both language comprehension and speech production," said Alexander Huth, a computational neuroscientist at the University of Texas at Austin (UT Austin) and co-author of the study.

In the study, published in the journal Current Biology, Huth and co-author Jerry Tang, a graduate student at UT Austin, explored ways to adapt the brain decoder for people who cannot complete lengthy, language-based training sessions.

"In this study, we asked ourselves whether we could do things differently. The idea was to determine if we could transfer a brain decoder trained on one person's brain to another," Huth explained.

To achieve this, researchers first trained the brain decoder on a group of reference participants. These participants underwent functional MRI (fMRI) scans while listening to 10 hours of narrated radio stories.

Next, the team used this reference data to develop two separate transformation algorithms and applied them to a new group of target participants. One algorithm was trained on data collected while the target participants listened to 70 minutes of radio stories. The other used data collected while they watched 70 minutes of silent Pixar short films unrelated to the radio stories.

Using a technique called functional alignment, the researchers mapped how the reference and target participants' brains responded to the same auditory or visual narratives. They then used this mapping to adapt the brain decoder to the target participants, without needing many hours of training data from them.
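The idea behind functional alignment can be illustrated with a toy simulation. This is a minimal sketch, not the study's method: it assumes, purely for illustration, that each brain's fMRI response is a noisy linear mix of shared "semantic features." It substitutes closed-form ridge regression for the alignment (converter) model and a simple linear readout for the real decoder, which generates word sequences. All data are synthetic. The names `ridge_fit`, `simulate_responses` and `run_demo`, along with every size and noise level, are hypothetical.

```python
# Toy illustration with synthetic data. The linear brain model, the ridge-regression
# converter and the linear decoder are assumptions, not the study's actual code.
import numpy as np


def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha*I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def simulate_responses(rng, semantics, voxel_weights, noise=0.1):
    """Hypothetical fMRI model: each voxel is a noisy linear mix of semantic features."""
    clean = semantics @ voxel_weights
    return clean + noise * rng.standard_normal(clean.shape)


def run_demo(seed=0, n_sem=10, n_ref_vox=60, n_tgt_vox=50):
    rng = np.random.default_rng(seed)

    # Each synthetic brain encodes the same features with different voxel weights.
    ref_weights = rng.standard_normal((n_sem, n_ref_vox))
    tgt_weights = rng.standard_normal((n_sem, n_tgt_vox))

    # 1. Reference participant: lots of story data, enough to train a decoder
    #    that maps reference voxels back to semantic features.
    story_sem = rng.standard_normal((1000, n_sem))
    ref_story = simulate_responses(rng, story_sem, ref_weights)
    ref_decoder = ridge_fit(ref_story, story_sem)

    # 2. Shared stimulus (e.g. a silent film) seen by BOTH participants.
    #    Alignment step: learn a map from target voxels to reference voxels.
    film_sem = rng.standard_normal((400, n_sem))
    ref_film = simulate_responses(rng, film_sem, ref_weights)
    tgt_film = simulate_responses(rng, film_sem, tgt_weights)
    converter = ridge_fit(tgt_film, ref_film)

    # Control: same fit, but with the reference time points shuffled, so the
    # two recordings no longer line up moment by moment.
    shuffled = ridge_fit(tgt_film, ref_film[rng.permutation(len(ref_film))])

    # 3. New test story, recorded only from the target participant.
    test_sem = rng.standard_normal((100, n_sem))
    tgt_test = simulate_responses(rng, test_sem, tgt_weights)

    def score(conv):
        # Target brain -> reference space -> reference decoder -> features.
        decoded = (tgt_test @ conv) @ ref_decoder
        return np.corrcoef(decoded.ravel(), test_sem.ravel())[0, 1]

    return score(converter), score(shuffled)


if __name__ == "__main__":
    aligned_r, shuffled_r = run_demo()
    print(f"aligned converter:  r = {aligned_r:.3f}")
    print(f"shuffled converter: r = {shuffled_r:.3f}")
```

In this toy setup, the aligned converter should recover the held-out features closely, while the shuffled control should land near zero. This illustrates why the reference and target recordings need to come from the same stimulus, matched moment by moment.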

The team then tested the trained decoder on a short story that none of the participants had previously heard.

Although the decoder’s predictions were slightly more accurate for the reference participants than for the target participants, for whom it was adapted using the transformation algorithms, the words it generated from each participant’s brain scans were still semantically similar to those in the test story.

For example, in one part of the test story, a character complained about a job they disliked, saying: "I'm a waitress at a cafe. So this is not… I don't know where I want to be, but I know this isn’t it."

The decoder using the film-based algorithm predicted: "I was at a job I found boring. I had to take orders and didn’t like it, even though I did it every day."

The decoder did not reproduce the exact words, but its predictions conveyed related concepts, Huth explained.

"The truly amazing thing is that we can achieve this even without using language data," Huth told Live Science.

"This means we can collect brain activity data simply while someone watches silent videos, and then use it to create a language decoder for their brain," he added.

Using video-based transformation algorithms to adapt existing brain decoders for aphasia patients may help them express their thoughts, the researchers noted.

The findings also reveal an overlap between how the human brain processes ideas conveyed through language and ideas conveyed through visual narratives.

In other words, the AI-powered decoder helps show that the brain represents certain concepts in similar ways, whether they arrive as spoken stories or as silent films.

Next, the research team plans to test the transformation algorithm on aphasia patients and develop an interface that will allow them to convert thoughts into language, helping them communicate more effectively.